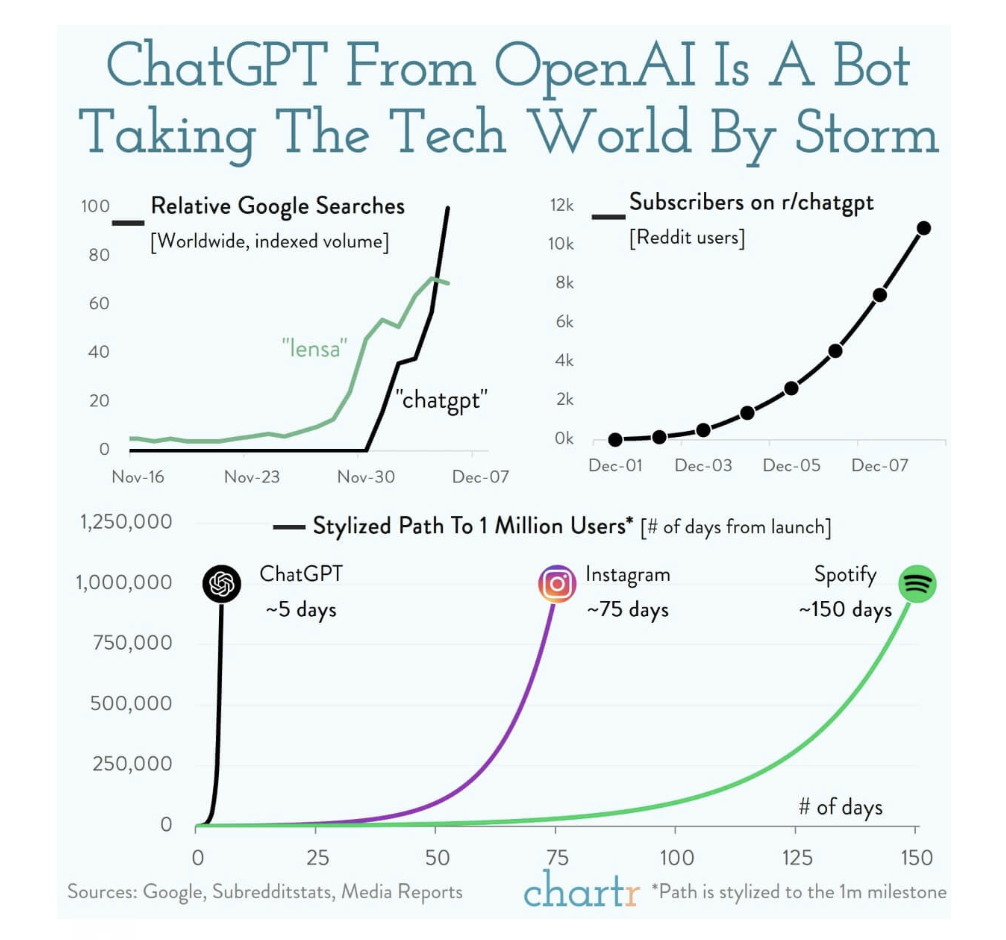

November 30, 2022, will be remembered as a pivotal moment: the day OpenAI launched ChatGPT and demonstrated the power of its Generative AI. The following chart is a testament to the public's enthusiasm for taking the conversational bot out for a test ride:

Almost overnight, users started exploring ChatGPT in fields as diverse as business school exams, developer skill tests, and business emails, and in areas as far out as drafting an order for a judge or turning out a complete draft bill for a lawmaker!

The Generative AI powering ChatGPT can generate something on its own when prompted. This is distinct from the more familiar Discriminative AI (DAI), which classifies input data into different categories. Both kinds of model make predictions: Generative AI seeks to predict what comes next in a given sequence of data (or words), while DAI determines which class a given data point is likely to fall into.
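To make the distinction concrete, here is a toy sketch in Python. It is our own illustration, not how ChatGPT or any production system works: a bigram word counter stands in for a generative model (predict the next word), and a keyword scorer stands in for a discriminative model (predict a class label). The corpus, the keyword list, and the function names `train_bigrams`, `predict_next`, and `classify` are all hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real language models train on vastly more text.
CORPUS = "the bot answers the question and the bot writes the email".split()

def train_bigrams(words):
    """Count which word follows which: a toy stand-in for a generative model."""
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def predict_next(follows, word):
    """Generative-style prediction: the most likely NEXT word in the sequence."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]

# Hypothetical keyword list; a real classifier would learn weights from labeled data.
SPAM_WORDS = {"winner", "free", "prize"}

def classify(text):
    """Discriminative-style prediction: which CLASS does the input fall into?"""
    hits = sum(w in SPAM_WORDS for w in text.lower().split())
    return "spam" if hits >= 2 else "not spam"

model = train_bigrams(CORPUS)
print(predict_next(model, "the"))                     # → bot
print(classify("You are a winner of a free prize"))  # → spam
```

The first model outputs a continuation of the sequence; the second outputs one label from a fixed set, which is the essential difference the paragraph draws.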

The technology underpinning Generative AI is powerful enough to generate text, images, video, and music from a text prompt.

ChatGPT is designed to hold human-like dialogue, going back and forth with the user to collect input. This gives the user a way to interact with the bot's underlying Large Language Model. The conversational format also brings human feedback into the loop (what the AI lingo calls Reinforcement Learning from Human Feedback).

Armed with a series of text prompts, the bot then generates long-form text, articles, and stories on a wide range of topics. According to its creator, ChatGPT was trained on a very large body of text available up to 2021 (when, apparently, its training on outside data stopped).

Bard Singing… But Where Is MusicLM?

ChatGPT's entry created a frenzy in the AI space. Several companies that had been toying with similar ideas felt compelled to launch their own apps so as not to be left too far behind. Google fast-tracked Bard, its own AI chatbot (powered by LaMDA, its Language Model for Dialogue Applications), which offers similar dialogue-based interaction.

Extolling Bard's virtues, Google CEO Sundar Pichai said: “Bard can be an outlet for creativity, and a launchpad for curiosity…”

Google is reportedly working on MusicLM, a Generative AI model that could generate music from text input. However, given the complexity of copyright law, it may take longer to see the light of day.

Quora is on the verge of releasing Poe, its own AI platform for generating detailed answers to the questions that pop up on its site.

Baidu, Tencent, Alibaba, and a number of other companies have announced that they will be coming out with their own Generative AI based apps in the very near future. Clearly, content generation (as opposed to content creation) is entering uncharted territory!

Upending Search?

Computationally, Discriminative AI models are less expensive than Generative AI models. Over the last decade or so, a wide range of applications built on DAI models have sprung up across industries. Today, Machine Learning models power almost every decision individuals and businesses make every day. You just cannot escape them.

Generative AI is going to upend the search space. It is no coincidence that Microsoft CEO Satya Nadella, while introducing the next generation of Bing search and the Edge browser, threw down the gauntlet: “This new Bing will make Google come out and dance, and I want people to know that we made them dance.”

Given a search query, the technology can generate a detailed answer with full context. Would users still choose to wade through a results page full of links, looking for the proverbial needle?

Search and research as we know them will be completely redefined, along with the business models underlying search technology. However, at the current state of the art (in hardware and in ML models alike), the transition to Generative AI based systems could incur significant costs.

Verbosity and Hallucination

Since all AI models are, at a deeper level, based on pattern matching, getting the right answer depends critically on how you phrase your query. As the ChatGPT creator cautions:

“[it] is sensitive to tweaks to the input phrasing or attempting the same prompt multiple times. For example, given one phrasing of a question, the model can claim to not know the answer, but given a slight rephrase, can answer correctly.”

Clearly, at the current state of the art, crafting the exact query that draws a precise answer (with fewer edits or false starts) from the underlying Large Language Model will require honing the skill of phrasing your intent accurately.

Given that Generative AI builds its answers by laying down each word or phrase next to the previous one, it is hardly surprising that those answers tend to be verbose. OpenAI has cautioned that the bot “sometimes writes plausible-sounding but incorrect or nonsensical answers” and that “the model is often excessively verbose and overuses certain phrases.” It may lack the context to know when to shut up!
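The word-by-word process described above is usually called autoregressive generation. Below is a toy sketch of it, assuming a hypothetical hand-written next-word table in place of a real language model (real models condition on the whole preceding context and choose among probabilities, not a single fixed successor). Each step appends the predicted next word, and generation ends only when the "model" emits an `<end>` marker or a length cap is hit. The names `NEXT_WORD`, `LOOPY`, `generate`, and `max_words` are illustrative only.

```python
# Hypothetical next-word table standing in for a language model's predictions.
NEXT_WORD = {
    "<start>": "generative",
    "generative": "ai",
    "ai": "predicts",
    "predicts": "the",
    "the": "next",
    "next": "word",
    "word": "<end>",
}

def generate(table, max_words=20):
    """Greedy autoregressive generation: each new word depends on the previous one."""
    words = []
    current = "<start>"
    while len(words) < max_words:
        nxt = table.get(current, "<end>")  # unknown context: treat as end of answer
        if nxt == "<end>":                 # the model "decides" it is done
            break
        words.append(nxt)
        current = nxt
    return " ".join(words)

print(generate(NEXT_WORD))  # → generative ai predicts the next word

# A table with no route to "<end>" never stops on its own; only the cap ends it.
LOOPY = {"<start>": "and", "and": "so", "so": "and"}
print(generate(LOOPY, max_words=5))  # → and so and so and
```

The second call gives one intuition for the verbosity problem: if the model rarely predicts "stop", only an external limit cuts the answer short.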

Google has warned that the technology is prone to producing convincing but entirely fictitious results, and Microsoft has warned that the bot may wander into territory resembling hallucination, ushering the phrase “AI hallucination” into the lexicon!

Miles to go…

Move Fast with Caution and Not Break Things

While curiosity about Generative AI is going through the roof, businesses do not seem eager to jump in headlong. And rightly so, as any error, even a minor one, could have debilitating consequences. An incorrect answer from Bard during its demo saw Google lose $100B in market cap in a single day!

Today, Discriminative AI powers Sales, Marketing, HR, e-Commerce, Medical Research, Customer Experience (CX), and Support functions in the B2B domain. It provides the first line of defense in fraud prevention and serves as a highly effective cyber sentry.

Despite its use across the spectrum, clear business benefits show up only in very specific use cases. The issue lies not so much in the algorithms themselves as in the quality (and quantity) of training, which takes time and effort (read: cost) before an algorithm can produce the most accurate results.

CX and Support functions stand to benefit greatly from Machine Learning. Both require serving customers with super-personalization and accurate problem-solving information, and serving them fast.

Generally, answers to 80% of our questions already exist today. Search engines powered by Machine Learning do a great job of fishing those answers out, with varying degrees of accuracy. Generative AI is poised to take this to the next level.

That still leaves us searching for answers to the remaining 20% of unknowns (which, per the Pareto Principle, could be responsible for 80% of the consequences). Those answers will have to wait for true Artificial General Intelligence (AGI), creative enough to take on the intellectual tasks that humans are capable of.

Until then, humans will continue to do the heavy lifting.