I’m going to put this as nicely as I can: SaaS help seekers are not the best searchers, especially when they’re in an enterprise support portal or community. I can say this with confidence because I’ve been analyzing user queries within support portals and communities for almost a decade. More often than not, people perform one- or two-word searches that are too generic to deliver relevant results. Why? Because relevance depends on context, context points to something specific, and generic queries are the opposite of specific.

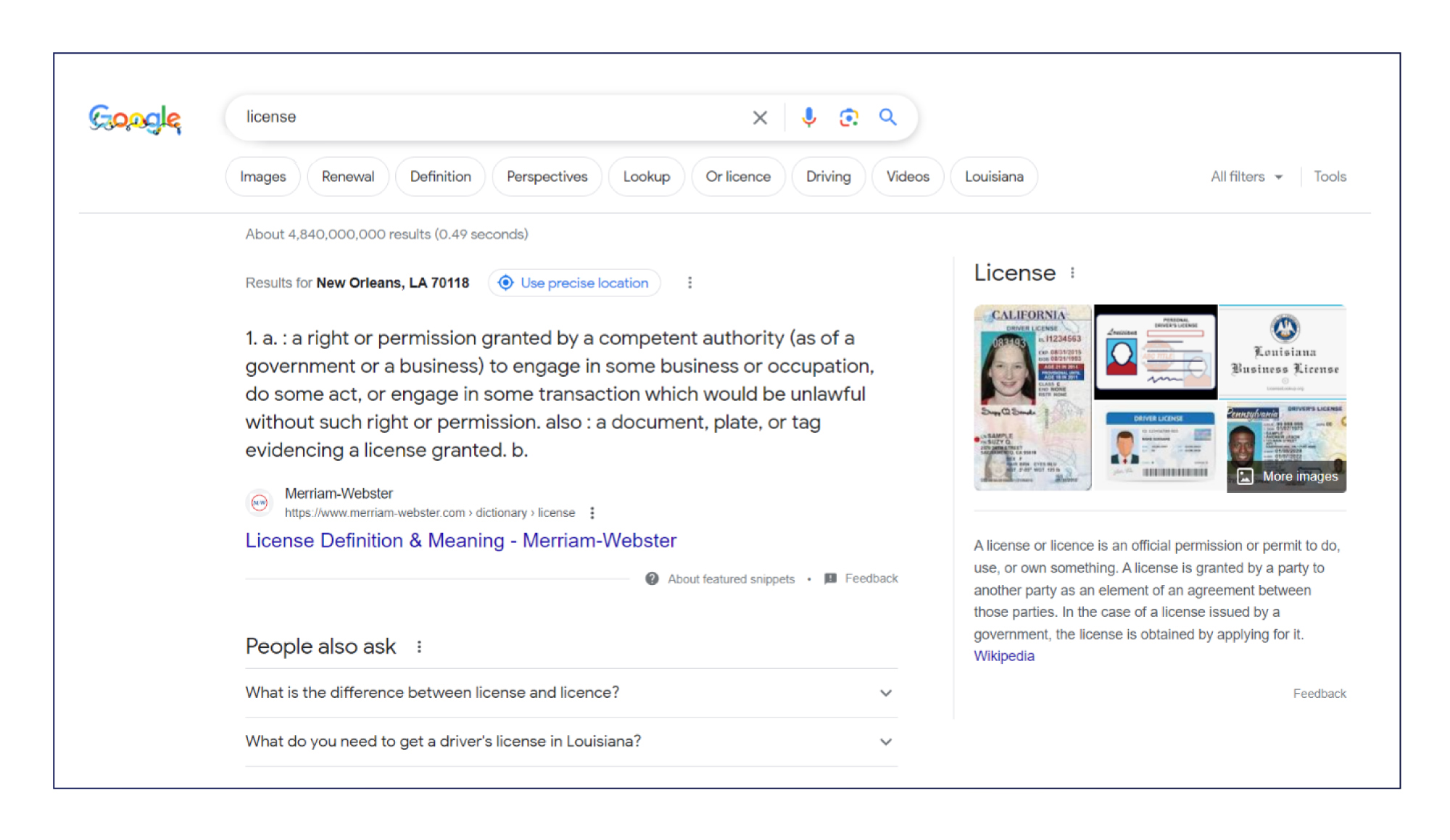

Let’s walk through an example together. Go to Google and search for “license,” no quotation marks needed. What do your results look like? This is what I see:

We have the dictionary definition of a license at the top, a knowledge panel on the right, and other suggested questions in the middle. Are these results relevant to me?

Nope! Sorry, Google. You did your best.

But how can this be? How could the almighty Google fail the relevance test?

Because my query lacked context. Google hedged its bets. A dictionary definition might be helpful as a place to start. It also could be that most queries containing “license” are about driver’s licenses, so that seems like a logical thing to put out there. And, hey! A little personalization flair: Merriam-Webster is my go-to dictionary, and Louisiana is my home state. So Google took some reasonable guesses and tried to personalize the experience, but it didn’t have enough context to detect my intent.

What I was looking for was information about some software I use; let’s call it Acme Software. The license is about to expire, but when I tried to renew it, I got an error, and now I’m stuck.

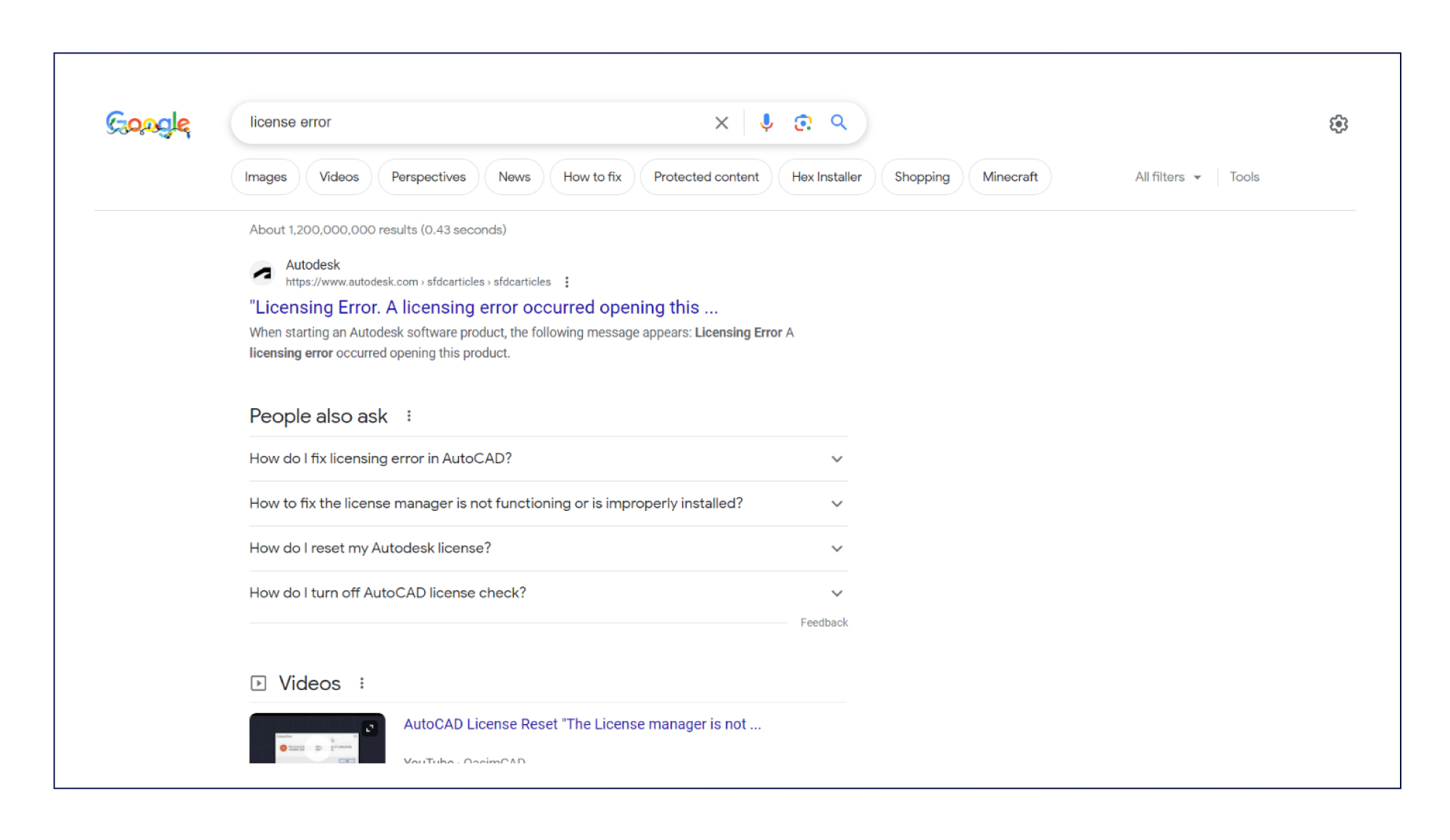

Oh! Would it have been better if I had searched for “license error” instead?

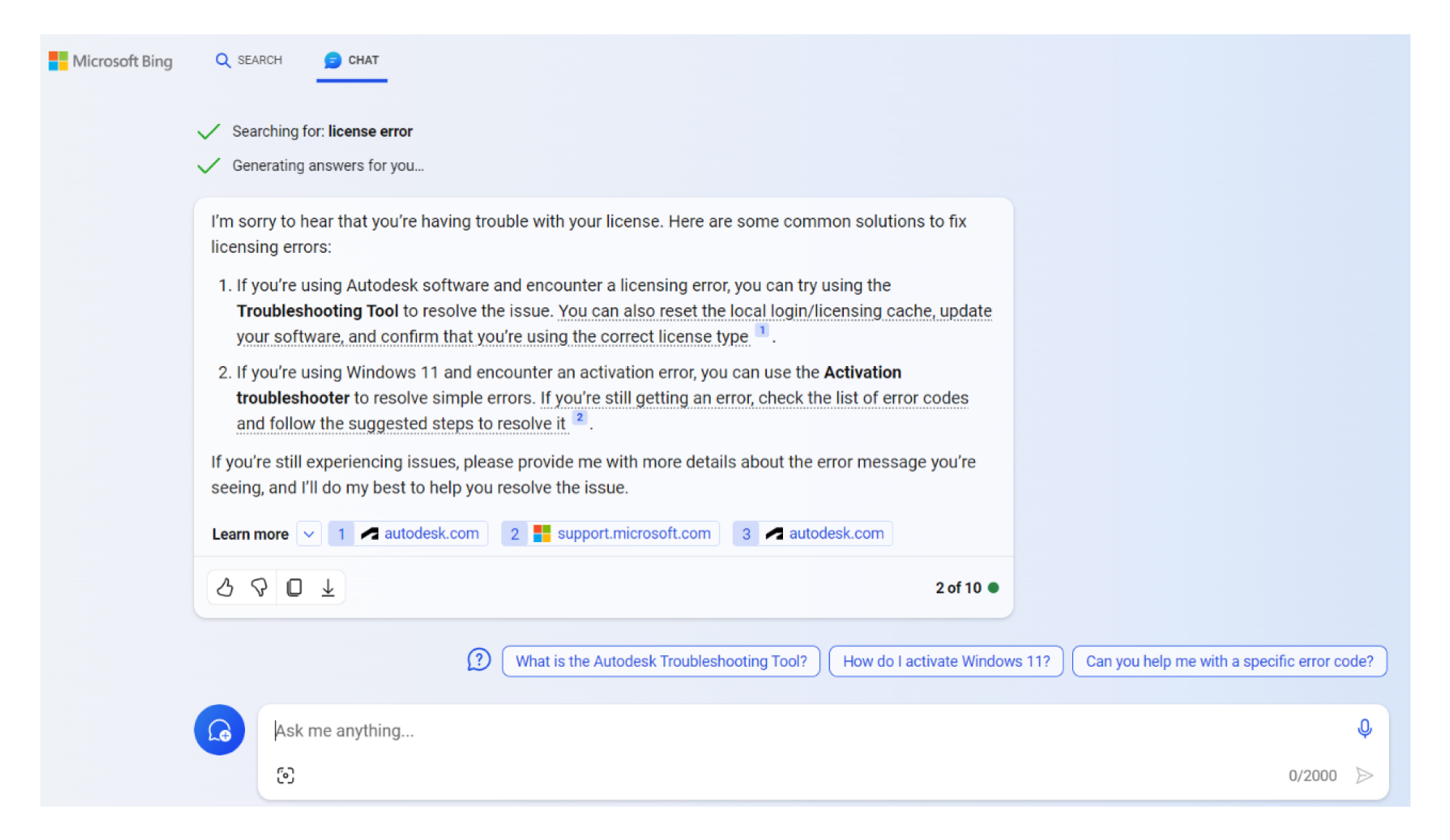

Ah, no. Wrong again. The results are even worse.

I don’t know how it settled on Autodesk. It must be a popular query with clicks, because the result isn’t personalized to me: I don’t use Autodesk, and I’ve never searched for it before. It could be that other users first searched for “license,” then revised it to “license error,” and ended up clicking on Autodesk content.

I’m willing to bet many of you reading this piece are thinking, “But I would never go to Google and just type in ‘license.’ That doesn’t make any sense. You have to include more information or you’ll never get relevant results.”

To this I say, “AHA!!!” That’s it exactly. No one goes into Google and searches for “license.” Doing so would raise the question, “License for what or to do what?”

Searchers instinctively give Google more context than they give a company’s support portal. Maybe they figure that, because the Internet is vast, they need to include some specifics to receive helpful results. But when they’re in a support portal, and maybe even authenticated, they think that we should intuit what the heck they’re talking about: that we should, to use the industry terminology, be able to detect their intent.

But why would one think this? I mean, personalized content delivery is a thing, but when a company has dozens of products, at least a few user profiles, and thousands of knowledge base articles and other content, it’s unreasonable to assume that one word is going to get you what you need.

As Google did, I suppose you could surface a direct answer for one-word queries, such as a dictionary definition or knowledge panel, but the answer would be a guess.

There’s a sizable contingent of folks who will point to this exercise and say that this is exactly why we are facing THE END OF SEARCH: that search has outlived its usefulness now that GenAI is here. They say we no longer have to search because GenAI just gives us the answer.

I say this is a load of hooey. GenAI isn’t approaching us, reading our minds, then giving us an answer based on what it found in there. We are approaching it. We are going to OpenAI’s ChatGPT or Bing Chat or Google Bard and entering queries. What is this activity other than searching? Call it a prompt or a question or a problem statement. Call it what you will; it’s a search.

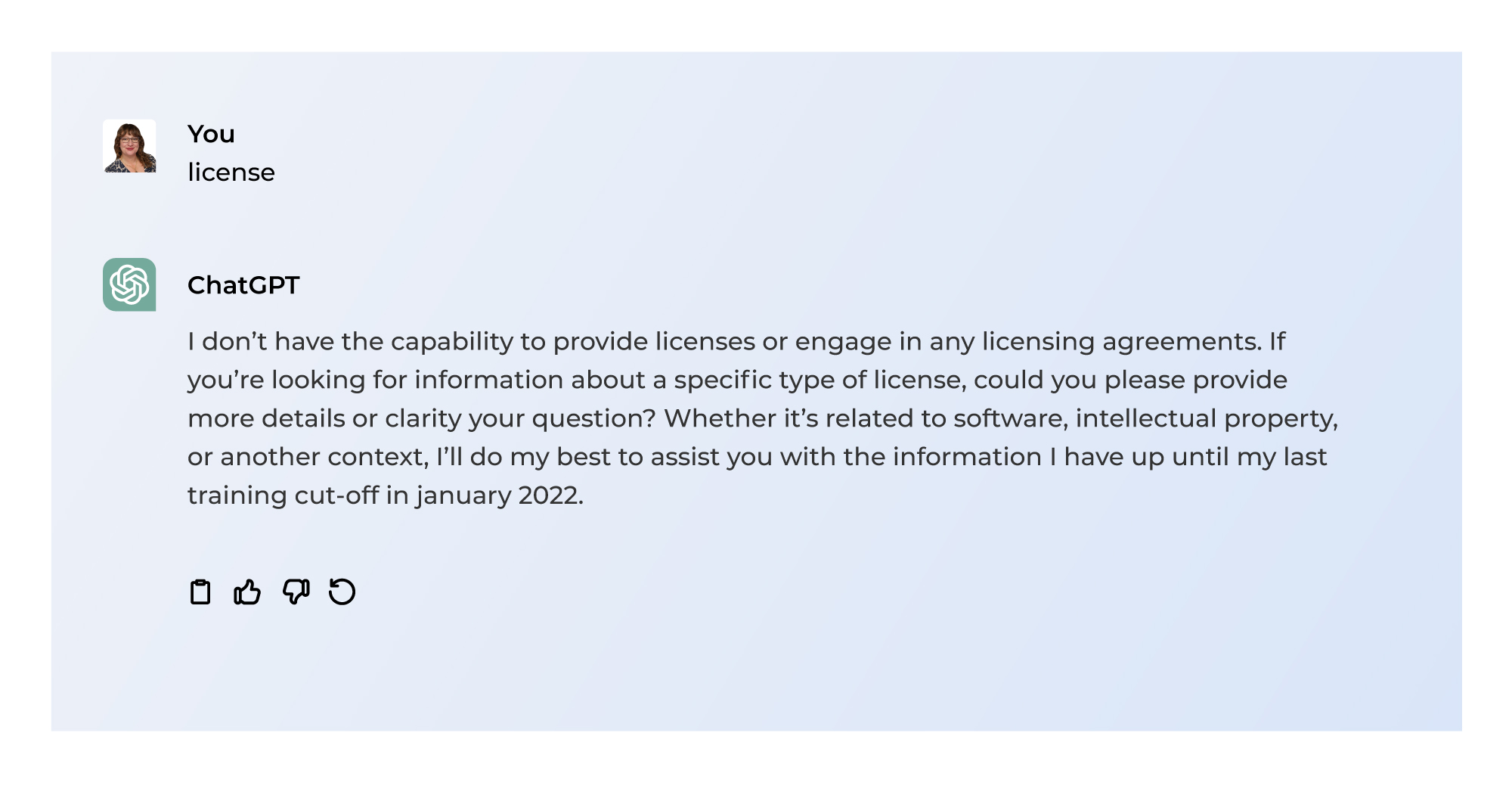

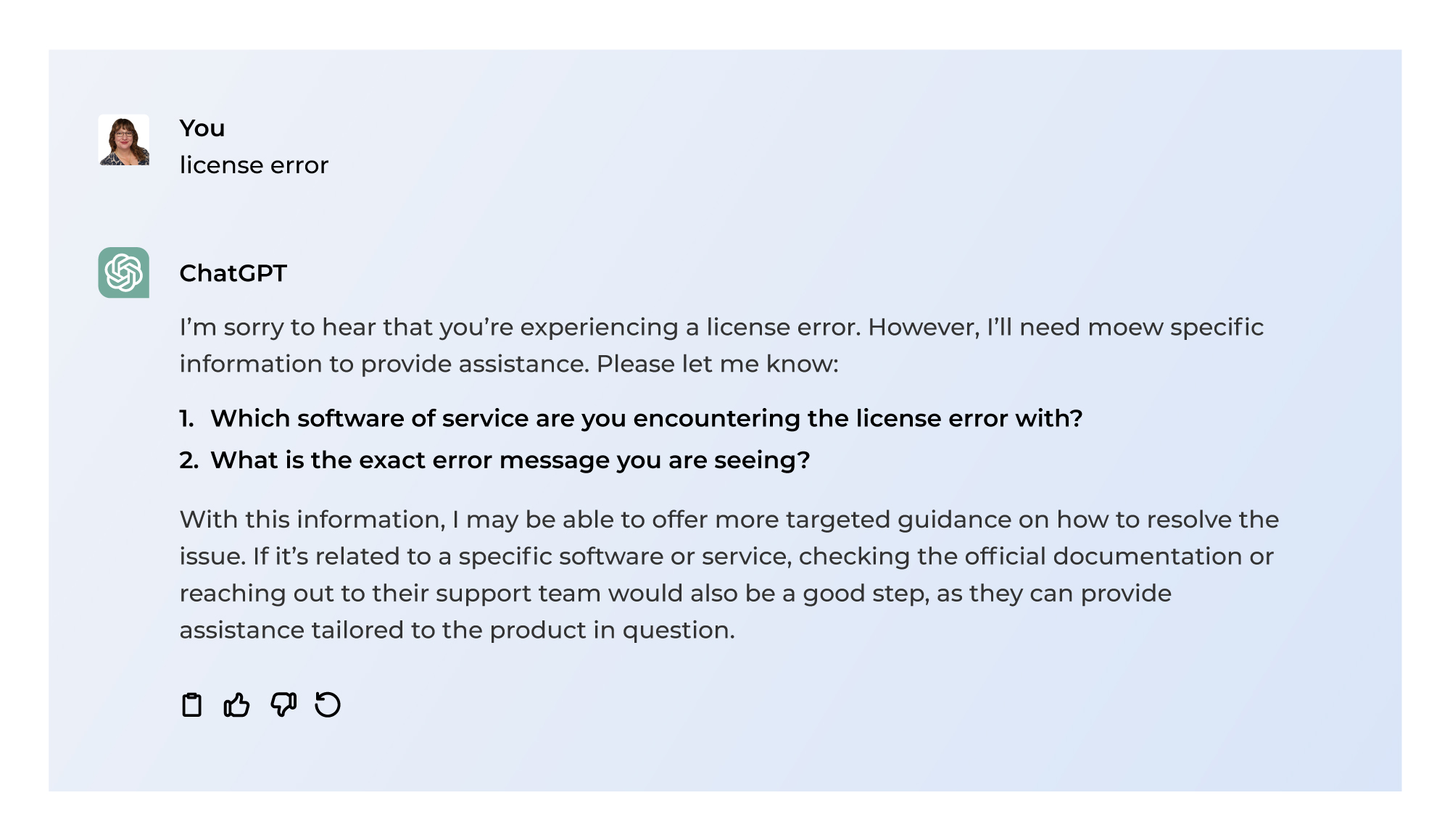

So what happens if we treat ChatGPT and Bing Chat as poorly as we treated Google? Here they are handling “license”:

And “license error”:

The big differentiator between Google on the one hand and ChatGPT 3.5 and Bing Chat on the other is that the latter two ask follow-up questions or ask for clarification. In other words, ChatGPT 3.5 and Bing Chat deploy the GenAI equivalent of crossing guards. Further, while Google always hazards a guess, and ChatGPT 3.5 seems to resist guessing, Bing Chat tends to take the middle road: It’ll give you some general information but also give you the opportunity to provide more details so that it can deliver a more specific answer.

I like this approach. It’s the best of both worlds.

Now that we’ve reached the end of this experiment, what have we learned? My soundest advice: Even with the slickest technology, be prepared for searches that lack context. In other words, account for established user behavior. A few ways to do that:

- Specific prompts may lead to more specific queries. Instead of putting “Search” in your search bar’s placeholder text, try “Ask a question or enter a problem statement.”

- Deliver a rich panel that features a definition or general information alongside a list of linked results.

- If you deploy GenAI search or chat with the objective of providing a relevant direct answer, fine-tune it to prompt for clarification or to ask follow-up questions.
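
For the technically inclined, here is a rough sketch in Python of what a crossing guard might look like. Everything in it is an assumption made for illustration: the product list, the three-word threshold, and the `needs_clarification` and `respond` helpers are all hypothetical, not anything from a real search platform. The idea is simply that a short query with no product context gets a clarifying question (plus a few choices) instead of a guess.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical product catalog; a real portal would load this from its own data.
PRODUCTS = ["Acme Software", "Acme Cloud", "Acme Mobile"]

# Assumed threshold: queries shorter than this are treated as lacking context.
# Tune it against your own query logs.
MIN_WORDS_FOR_CONTEXT = 3


@dataclass
class SearchResponse:
    mode: str  # "clarify" or "results"
    message: str
    suggestions: List[str] = field(default_factory=list)


def needs_clarification(query: str, known_product: Optional[str] = None) -> bool:
    """Return True when the query is short and nothing tells us which product it concerns."""
    mentions_product = any(p.lower() in query.lower() for p in PRODUCTS)
    is_short = len(query.split()) < MIN_WORDS_FOR_CONTEXT
    return is_short and not mentions_product and known_product is None


def respond(query: str, known_product: Optional[str] = None) -> SearchResponse:
    """Ask a follow-up question for context-free queries; otherwise hand off to search."""
    query = query.strip()
    if needs_clarification(query, known_product):
        return SearchResponse(
            mode="clarify",
            message=f'Which product is your "{query}" question about?',
            suggestions=list(PRODUCTS),
        )
    # Placeholder for the real search or GenAI call, which is out of scope here.
    return SearchResponse(mode="results", message=f'Showing results for "{query}"')
```

With this sketch, `respond("license")` and `respond("license error")` both come back asking which product you mean, while `respond("license error", known_product="Acme Software")` goes straight to results. A word count is a crude stand-in for real intent detection, but it shows where the crossing guard sits: in front of the answer, not after it.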

As we draw near the end of 2023, my prediction for the next year or two is that the artificial distinction between traditional search and GenAI-powered chat will shrink. User behavior in the form of more contextual searches may or may not improve in your enterprise support portal, but with crossing guards in place, you’re sure to get more folks quickly and safely to their destination.