Know the latest trends and best practices in customer support

Organizations are in a race to adopt Large Language Models (LLMs). While they stand to gain significant productivity improvements from LLMs, sending a user question directly to an open-source LLM increases the potential for hallucinated responses, because the model answers from the generic dataset it was trained on. This is where SUVA’s Federated Retrieval Augmented Generation approach to LLMs comes into play. By enhancing the user input with context from a 360-degree view of the enterprise knowledge base, the LLM-integrated SUVA can more readily generate a contextual response with factual content.
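As an illustration of the general retrieval-augmented pattern described here, the following is a minimal sketch, not SUVA's actual implementation. The `Passage` type, the word-overlap scoring, and the prompt wording are all assumptions made for the example; a production system would use a real search index and a real LLM client.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    """A hypothetical indexed snippet from one enterprise content source."""
    source: str
    url: str
    text: str


def tokens(text: str) -> set[str]:
    # Lowercased word set; a stand-in for real text analysis.
    return set(re.findall(r"\w+", text.lower()))


def score(query: str, passage: Passage) -> int:
    # Toy relevance: number of query words that also occur in the passage.
    return len(tokens(query) & tokens(passage.text))


def retrieve(query: str, index: list[Passage], k: int = 2) -> list[Passage]:
    # Rank passages from all sources together and keep the top k matches.
    ranked = sorted(index, key=lambda p: score(query, p), reverse=True)
    return [p for p in ranked[:k] if score(query, p) > 0]


def build_prompt(query: str, passages: list[Passage]) -> str:
    # Prepend retrieved context (with source links) to the user question.
    context = "\n".join(
        f"[{i}] {p.text} (source: {p.url})" for i, p in enumerate(passages, 1)
    )
    return (
        "Answer using only the context below and cite sources by number.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

The prompt returned by `build_prompt` is what would be sent to the LLM in place of the raw user question, so the model can ground its answer in the retrieved passages and cite their links.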

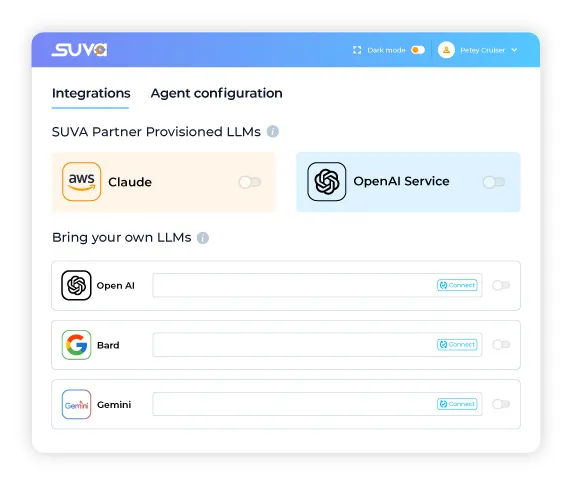

SUVA supports plug-and-play integration with leading public LLMs (such as Bard, OpenAI, and open-source models hosted on Hugging Face), partner-provisioned LLMs (such as Claude and Azure), and our in-house inference models. This means you can start using SUVA’s LLM-infused capabilities simply by entering the API details for your LLM.

With SUVA, an easy-to-use UI setting gives admins access to temperature control, a parameter used in chatbot interactions to adjust the randomness and creativity of the responses generated by the model. Admins can therefore control the variability in responses based on user persona, use maturity, enterprise use case, and other factors.
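To make the effect of temperature concrete, here is a small sketch of how the parameter is commonly applied when a language model samples its next token. It shows the standard temperature-scaled softmax, not SUVA internals; the function name and the example scores are illustrative assumptions.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw model scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.0]  # hypothetical scores for three candidate tokens
focused = softmax_with_temperature(scores, 0.2)  # low temperature
varied = softmax_with_temperature(scores, 2.0)   # high temperature
```

With a low temperature, almost all probability goes to the top-scoring candidate, so responses are more predictable. A high temperature flattens the distribution, so lower-ranked candidates are chosen more often and responses vary more.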

SUVA takes user interaction to the next level with its advanced Speech-to-Text (STT) and Text-to-Speech (TTS) capabilities. Whether users prefer to speak their commands or listen to chatbot replies, SUVA’s STT converts voice commands into text, while TTS transforms responses into audio messages. This integration not only enhances accessibility but also makes interactions more natural and engaging, leveraging the power of LLMs to deliver smooth, dynamic conversations.

Leveraging LLMs as linguistic engines, [F]RAG™ enables SearchUnify Virtual Assistant to swiftly pinpoint gaps in self-service knowledge, ensuring your support knowledge base remains robust and fully equipped to respond to user queries.

Utilizing Federated Retrieval Augmented Generation, SUVA provides links to reference sources for its responses, ensuring credibility and user confidence. By including links as part of the response, users can access the origin of the information, fostering trust and reliability in the system's answers.

SUVA implements a robust feedback system allowing users to express satisfaction or provide detailed feedback. This data aids in refining the virtual assistant’s responses, improving user experience over time.

By identifying and learning from frequently asked questions, SUVA implements a caching mechanism to train on user queries, reducing the reliance on LLMs for repetitive user questions. This ensures cost-effective and fine-tuned interactions.

Apart from the textual knowledge base, SUVA looks up information across indexed video and audio files to generate relevant responses. For enterprises, these content sources can be tutorial videos, product videos, marketing collateral, conferences, resolved IVR cases, etc. Based on the query match, SUVA returns the text response along with the audio or video file, starting from the timestamp from which the context for the response was taken. You can also listen to the audio and watch the video in the SUVA chat window itself.
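One way to picture timestamped media results is to index transcripts in segments, each with a start time, and link to the best-matching segment. The sketch below assumes that design; the `Segment` type and the word-overlap matching are hypothetical, and the `#t=` suffix follows the W3C Media Fragments convention for a playback start time.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Segment:
    """A hypothetical transcript slice of an indexed audio or video file."""
    media_url: str
    start_seconds: int
    transcript: str


def words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def best_segment(query: str, segments: list[Segment]) -> Optional[Segment]:
    # Pick the segment sharing the most words with the query, if any match.
    q = words(query)
    best = max(segments, key=lambda s: len(q & words(s.transcript)), default=None)
    if best is None or not (q & words(best.transcript)):
        return None
    return best


def playback_link(segment: Segment) -> str:
    # Media fragment: ask the player to start at the segment's timestamp.
    return f"{segment.media_url}#t={segment.start_seconds}"
```

A chat client could then embed the returned link so that playback begins at the point the answer was drawn from.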

SUVA supports multilingual voice interactions with native language detection and promptly responds to user queries in their preferred language. It understands natural language spoken by the user through voice channels and synthesizes the appropriate response.

By auditing and filtering out queries that are transactional in nature, SUVA segregates intents and caches queries that receive multiple hits. Repeat queries are then answered from the cache instead of the LLM, which reduces LLM usage costs.
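The caching idea can be sketched as follows. This is a simplified illustration under stated assumptions, not SUVA's mechanism: the keyword list used to detect transactional queries, the `min_hits` threshold, and the `RepeatQueryCache` class are all hypothetical.

```python
import re
from collections import Counter
from typing import Callable

# Hypothetical markers of transactional (user-specific) queries.
TRANSACTIONAL_HINTS = ("my order", "my ticket", "my account")


class RepeatQueryCache:
    """Cache answers for informational queries once they repeat."""

    def __init__(self, llm: Callable[[str], str], min_hits: int = 2):
        self.llm = llm
        self.min_hits = min_hits
        self.hits: Counter = Counter()
        self.answers: dict[str, str] = {}
        self.llm_calls = 0

    @staticmethod
    def normalize(query: str) -> str:
        # Lowercase and drop punctuation so trivial variants share a key.
        return " ".join(re.findall(r"\w+", query.lower()))

    @staticmethod
    def is_transactional(key: str) -> bool:
        return any(hint in key for hint in TRANSACTIONAL_HINTS)

    def ask(self, query: str) -> str:
        key = self.normalize(query)
        if key in self.answers:
            return self.answers[key]  # served from cache, no LLM call
        self.llm_calls += 1
        answer = self.llm(query)
        if not self.is_transactional(key):
            self.hits[key] += 1
            if self.hits[key] >= self.min_hits:
                self.answers[key] = answer
        return answer
```

Transactional queries are never cached in this sketch because their answers depend on the individual user, while informational queries that repeat are served from the cache once they reach the hit threshold.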

Instead of following a fixed, predefined path, the chatbot uses the language model to determine the appropriate next step based on the user's query, allowing for a more flexible and adaptive conversation.

SUVA respects role-based access controls, which define user roles and associate them with specific access privileges, when generating responses. By continuously adapting based on user interactions, SUVA analyzes engagement patterns and preferences to improve interactions.
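A common way to honor role-based access in a retrieval pipeline is to filter the candidate documents by the user's roles before any content reaches the LLM. The sketch below assumes that approach; the `Document` type and role names are hypothetical and do not reflect SUVA's data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """A hypothetical indexed document tagged with the roles allowed to see it."""
    title: str
    allowed_roles: frozenset


def visible_documents(user_roles: set, documents: list) -> list:
    # Keep only documents that share at least one role with the user.
    roles = set(user_roles)
    return [d for d in documents if roles & d.allowed_roles]


docs = [
    Document("Public FAQ", frozenset({"customer", "agent", "admin"})),
    Document("Internal runbook", frozenset({"agent", "admin"})),
    Document("Billing policy draft", frozenset({"admin"})),
]
customer_view = [d.title for d in visible_documents({"customer"}, docs)]
agent_view = [d.title for d in visible_documents({"agent"}, docs)]
```

Filtering before generation means a response can only draw on content the requesting user is already permitted to read.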

In situations where the configured LLM is inaccessible, the fallback mechanism allows SUVA to provide alternative responses or otherwise handle user queries appropriately from your index (stored in a knowledge base or a separate fallback module).
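The fallback behavior can be pictured as a guarded call to the LLM with an index lookup as the alternative path. This is a minimal sketch under assumptions: `call_llm` and `search_index` are hypothetical callables supplied by the caller, and the error types caught here are only examples of how an unreachable LLM might surface.

```python
from typing import Callable


def answer_with_fallback(
    query: str,
    call_llm: Callable[[str], str],
    search_index: Callable[[str], list],
) -> dict:
    """Try the LLM first; if it is unreachable, answer from the index."""
    try:
        return {"source": "llm", "text": call_llm(query)}
    except (TimeoutError, ConnectionError):
        # LLM unavailable: fall back to the top search result, if any.
        hits = search_index(query)
        if hits:
            return {"source": "index", "text": hits[0]}
        return {
            "source": "none",
            "text": "Sorry, no answer is available right now.",
        }
```

Returning the `source` alongside the text lets the chat interface indicate whether an answer was generated or retrieved directly from the index.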

