While we were still marveling at the superpowers of Artificial Intelligence (AI), the advent of Large Language Models (LLMs), like ChatGPT, took the world by surprise. Their extraordinary ability to generate human-like text has transcended traditional AI boundaries, opening a world of possibilities for modern businesses.

However, despite their remarkable abilities, LLMs have certain limitations. They may generate inaccurate or biased information, show a limited grasp of context, and struggle to deliver concise, precise responses.

This is where ‘prompt engineering’ emerges as a guiding light! It is the process of crafting precise instructions or cues that guide LLMs toward coherent, contextually relevant responses.

Let’s dig deeper into this futuristic concept!

The Need for Prompt Engineering in Large Language Models

Think of prompt engineering as a magical wand that empowers you to bend the language models to your will.

By carefully designing a prompt, you can provide language models with the necessary context to create optimal responses.

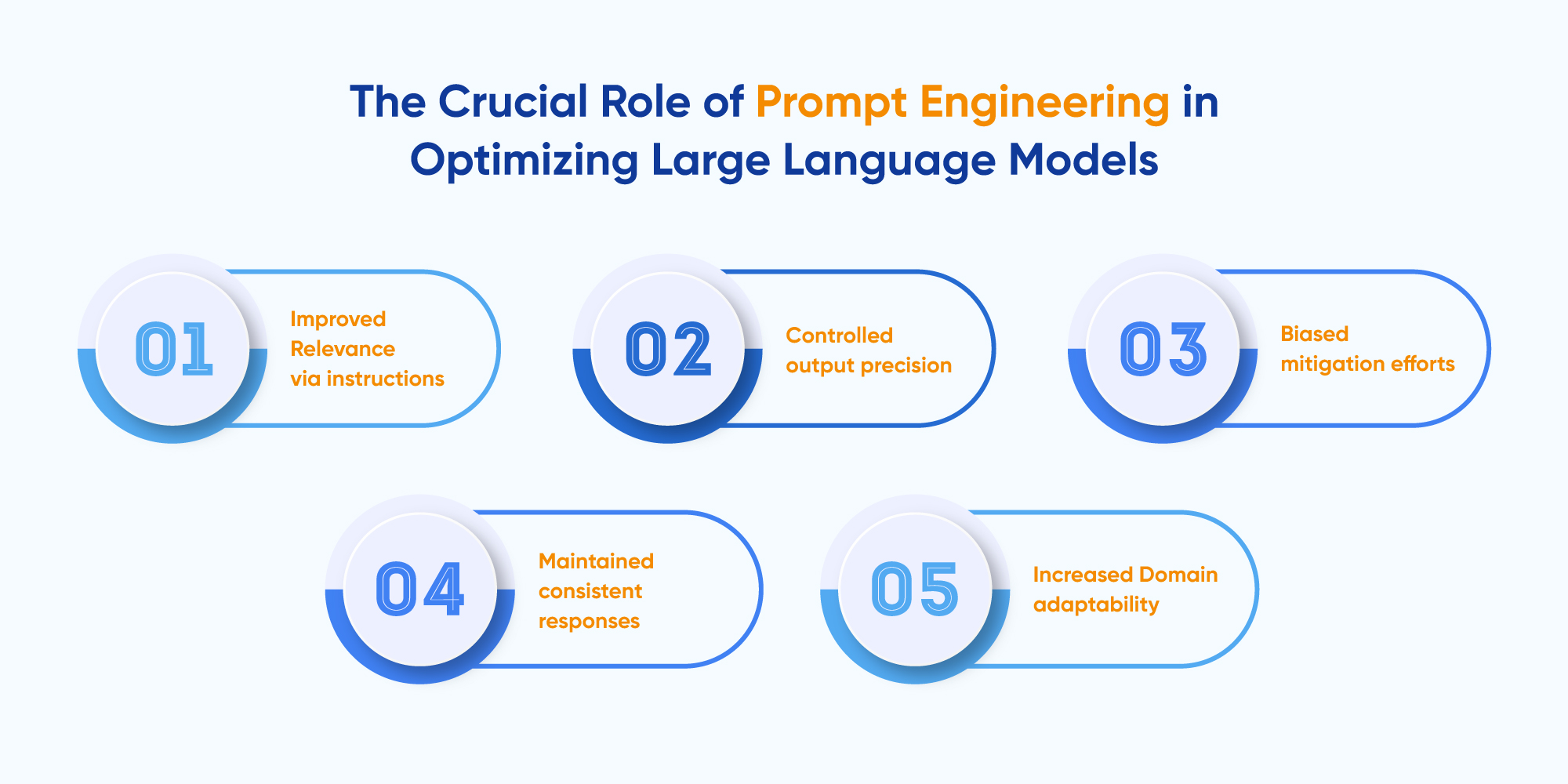

However, the need for prompt engineering goes beyond mere guidance. It lets users influence and steer the behavior of LLMs without retraining them, transforming the models into powerful tools that cater to their specific needs.

Given below are some of its significant benefits.

The Anatomy of a Well-Engineered Prompt

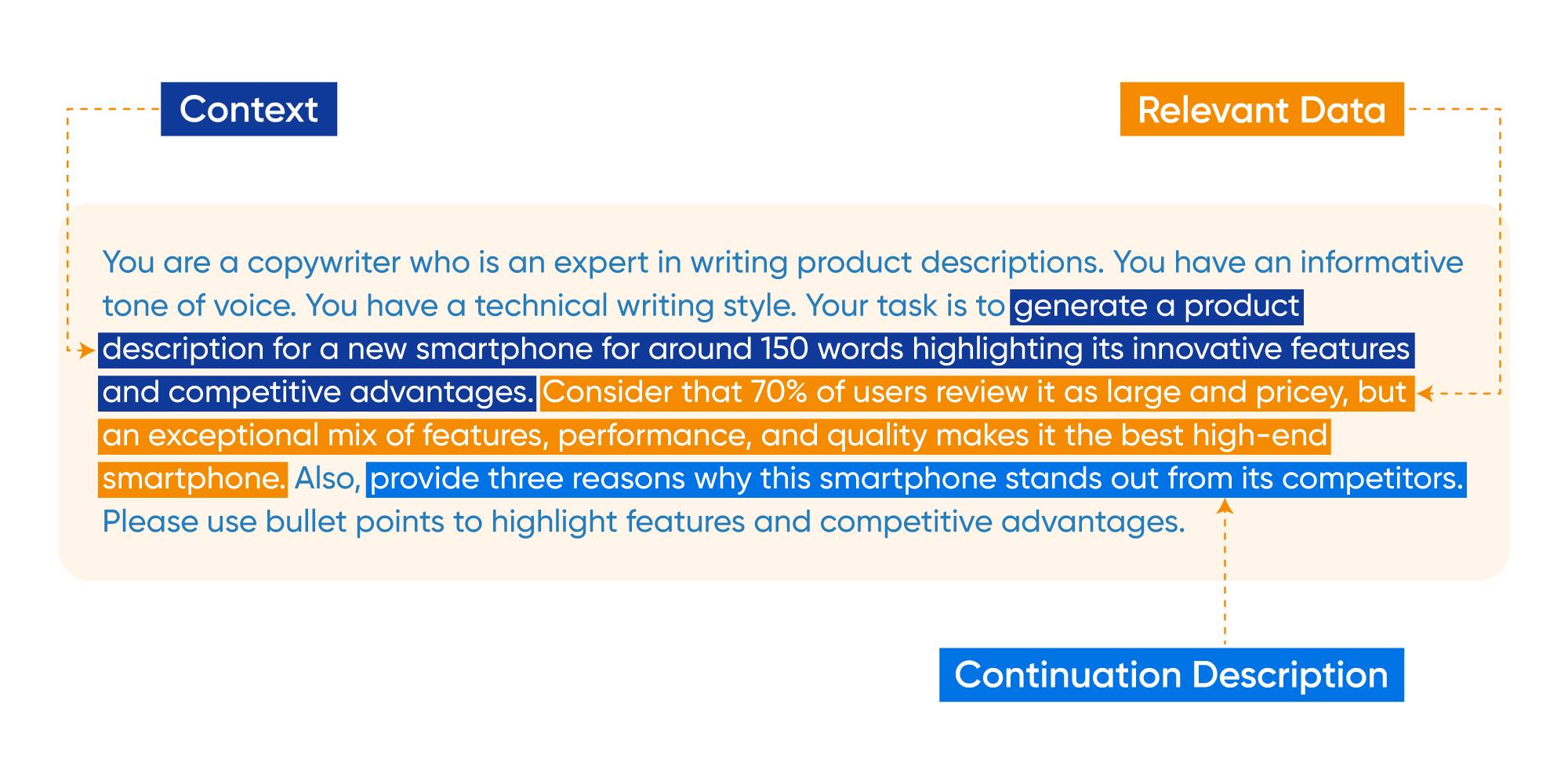

Crafting a good prompt might have you feeling like a skilled architect designing the blueprint for a building. The more relevant detail you add, the better the model can understand what you need.

Here are three elements that are essential in creating a solid foundation for LLMs:

- Directing the Narrative with Context

The context in a prompt plays a crucial role in providing the necessary background and objectives for the language model. Imagine asking the model to “Write a product description for a new smartphone highlighting its innovative features and competitive advantages.” Here, the context clearly outlines the objective: a product description with a specific emphasis.

- Fueling Learning and Understanding with Relevant Data

Incorporating relevant data into the prompt allows the language model to draw on specific information and examples. For example, you could include a set of customer reviews of a new smartphone in your prompt. This gives the model valuable insights that help it generate more informed and accurate responses.

- Guiding the Model’s Thought Process with Continuation Description

Continuation description guides the language model on how to proceed from the given context and data. It may include instructions for summarization, keyword extraction, or even engaging in a back-and-forth conversation. For instance, a continuation description like “Please provide three reasons why this smartphone stands out from its competitors” directs the model to generate a response highlighting three key differentiating factors.

A good prompt may look something like this:

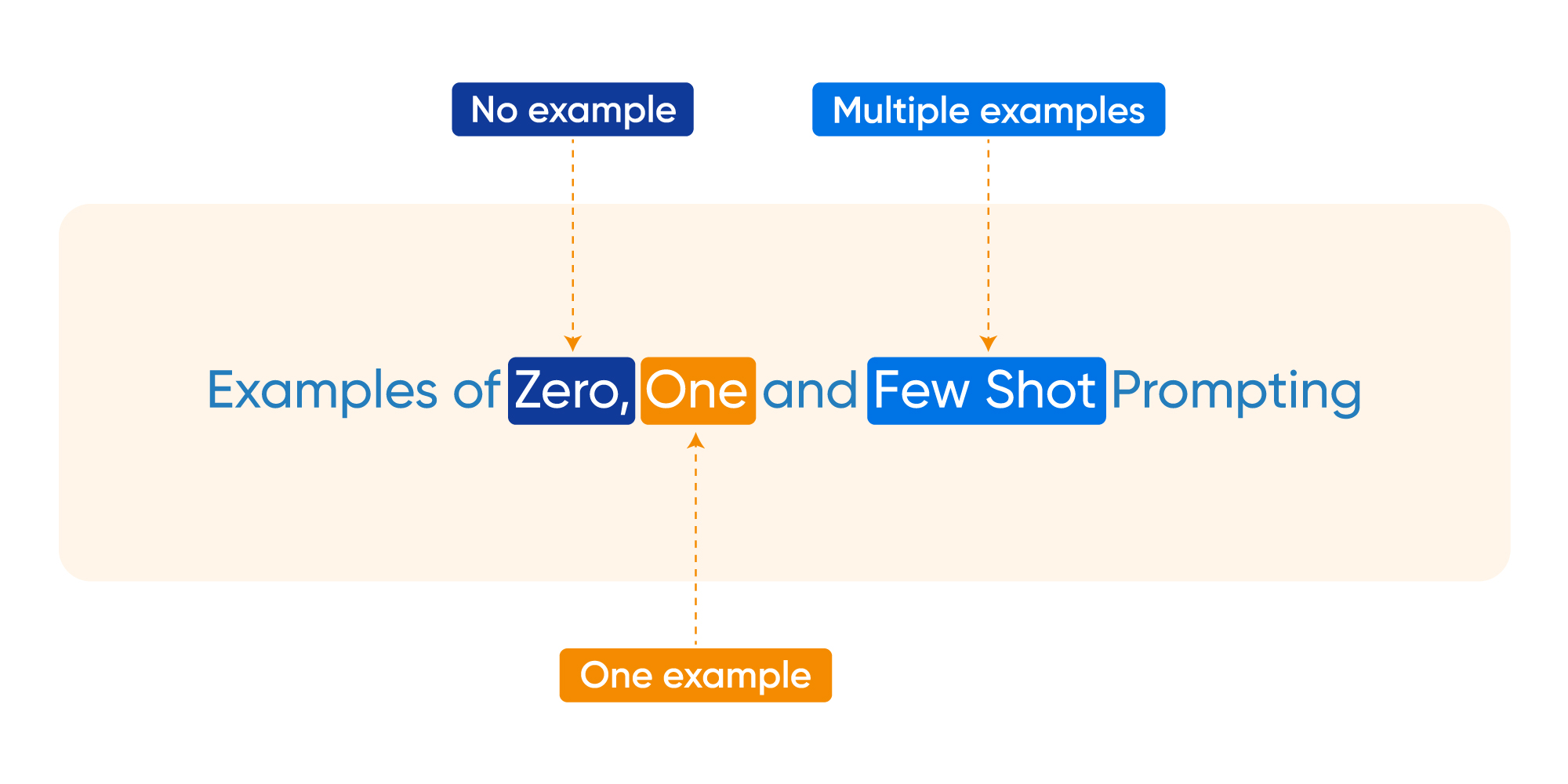
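The three elements can also be assembled programmatically. Below is a minimal Python sketch that joins context, relevant data, and a continuation description into one prompt string.

These pieces are hypothetical illustrations and not part of any particular LLM API:

- the `PromptSpec` class
- the `build_prompt` function
- the "Context/Data/Task" section labels
- the sample reviews

You would send the resulting string to whichever model you use.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptSpec:
    """Hypothetical container for the three prompt elements."""
    context: str                                   # background and objective
    data: List[str] = field(default_factory=list)  # relevant supporting data
    continuation: str = ""                         # how the model should proceed


def build_prompt(spec: PromptSpec) -> str:
    """Join context, data, and continuation description into one prompt."""
    sections = [f"Context:\n{spec.context}"]
    if spec.data:
        # One bullet per data item so the model can tell them apart.
        bullets = "\n".join(f"- {item}" for item in spec.data)
        sections.append(f"Data:\n{bullets}")
    if spec.continuation:
        sections.append(f"Task:\n{spec.continuation}")
    # Blank lines between sections keep the structure easy to follow.
    return "\n\n".join(sections)


if __name__ == "__main__":
    spec = PromptSpec(
        context=(
            "Write a product description for a new smartphone highlighting "
            "its innovative features and competitive advantages."
        ),
        # Made-up sample reviews, for illustration only.
        data=[
            "Review: The battery easily lasts a full day.",
            "Review: Photos look sharp even in low light.",
        ],
        continuation=(
            "Please provide three reasons why this smartphone stands out "
            "from its competitors."
        ),
    )
    print(build_prompt(spec))
```

Keeping each element in its own labeled section makes it easy to swap in new data or a different continuation description without rewriting the whole prompt.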

3 Key Techniques in Prompt Engineering

As we go further into the concept of prompt engineering, a few techniques can help you master the art. The three major ones are:

Zero-Shot Prompting

Zero-shot prompting asks a language model to perform a task without giving it any examples of that task in the prompt. How does it work? You provide the model with an instruction describing the task (and, for classification tasks, the set of target labels). The model then relies on the general knowledge and reasoning abilities it acquired during training to generate a relevant response.

Here is an example:

Prompt: Translate the following English phrase to French: ‘Hello, how are you?’

Output: Bonjour, comment ça va ?

In this zero-shot prompt, the language model produces a reasonable French translation without being shown any example translations. It relies on the knowledge of English and French it picked up during training.
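Here is a minimal Python sketch of how a zero-shot prompt might be built. It contains only an instruction and the input, plus an optional list of target labels for classification, and no examples. The function name and the "Input:/Answer:" layout are assumptions for illustration, not a standard format.

```python
from typing import Optional, Sequence


def zero_shot_prompt(task: str, text: str,
                     labels: Optional[Sequence[str]] = None) -> str:
    """Build a zero-shot prompt: an instruction and the input, but no examples."""
    lines = [task]
    if labels:
        # For classification, list the allowed target labels explicitly.
        lines.append("Answer with one of: " + ", ".join(labels) + ".")
    lines.append(f"Input: {text}")
    lines.append("Answer:")
    return "\n".join(lines)


if __name__ == "__main__":
    # The translation example from the text.
    print(zero_shot_prompt("Translate the following English phrase to French.",
                           "Hello, how are you?"))
    print()
    # A zero-shot classification prompt with target labels.
    print(zero_shot_prompt("Classify the sentiment of this customer review.",
                           "The phone arrived quickly and works great.",
                           labels=["positive", "negative", "neutral"]))
```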

One-Shot Prompting

One-shot prompting involves providing a single example in the prompt to enhance the model’s understanding.

For example, if you want the model to write a personalized email response, you can include one sample email and its reply in the prompt. The model can then generate a new response that follows the structure, tone, and content of the provided example.
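The email scenario can be sketched in Python as a prompt with exactly one worked example (an email and its reply) followed by the new email. The function, the section labels, and the sample emails are hypothetical, chosen only to illustrate the one-shot pattern.

```python
def one_shot_prompt(instruction: str, example_email: str,
                    example_reply: str, new_email: str) -> str:
    """Build a one-shot prompt: exactly one worked example, then the new input."""
    return (
        f"{instruction}\n\n"
        f"Example email:\n{example_email}\n\n"
        f"Example reply:\n{example_reply}\n\n"
        f"Email:\n{new_email}\n\n"
        "Reply:"  # left open for the model to complete
    )


if __name__ == "__main__":
    # Hypothetical emails, for illustration only.
    print(one_shot_prompt(
        "Write a friendly, concise reply to the customer email, following the example.",
        example_email="Hi, my order hasn't arrived yet. Can you check?",
        example_reply=("Hi Sam, thanks for reaching out! Your order shipped yesterday "
                       "and should arrive within two days. Best, Alex"),
        new_email="Hello, can I change the delivery address on my order?",
    ))
```

Ending the prompt with an open "Reply:" label signals where the model should continue, mirroring the layout of the example.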

Few-Shot Prompting

Few-shot prompting takes the concept of rapid adaptation to a whole new level. In this, instead of bombarding the model with vast amounts of training data, you provide it with only a few labeled examples or demonstrations for a specific task. This allows the model to quickly generalize and adapt to the specific task, showcasing its remarkable ability to learn from limited information.

Let’s say you want a language model to generate movie recommendations based on a few examples. You provide the model with the following prompt:

Prompt: I enjoyed watching ‘Inception’ and ‘The Matrix.’ Recommend me a mind-bending sci-fi movie.

Using just these two titles as reference points, the model infers the genre and style you’re looking for. Now, when you ask the model for a recommendation with the prompt “Suggest another mind-bending sci-fi movie,” it can generate a response like:

Output: You might enjoy ‘Blade Runner 2049.’ It’s a visually stunning and thought-provoking sci-fi film that will keep you on the edge of your seat.
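In its more structured form, a few-shot prompt contains several labeled input/output demonstrations followed by the new input in the same format. The Python sketch below builds such a prompt. The function name, the "Input:/Output:" layout, and the demonstration pairs are illustrative assumptions, not a prescribed template.

```python
from typing import Sequence, Tuple


def few_shot_prompt(instruction: str,
                    examples: Sequence[Tuple[str, str]],
                    query: str) -> str:
    """Build a few-shot prompt from labeled (input, output) demonstrations."""
    parts = [instruction, ""]
    for example_input, example_output in examples:
        parts += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # The query uses the same format, with the output left for the model.
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)


if __name__ == "__main__":
    # Illustrative demonstrations only.
    demos = [
        ("I enjoyed 'Inception'.", "You might enjoy 'Interstellar'."),
        ("I enjoyed 'The Matrix'.", "You might enjoy 'Dark City'."),
    ]
    print(few_shot_prompt("Recommend one mind-bending sci-fi movie.",
                          demos, "I enjoyed 'Arrival'."))
```

Keeping every demonstration in an identical format helps the model pick up the pattern it is expected to continue.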

How SearchUnify Leverages Prompt Engineering to Drive Results

SearchUnify, a leading enterprise agentic platform, leverages prompt engineering to optimize the performance of LLMs for customer support. Key applications include:

- Text Summarization

- Generative Question Answering

- Analysis of the Next Best Action

Let’s get into the details!

Text Summarization

SearchUnify feeds the language model specific prompts that capture the objective of the content. As a result, the model extracts the important information from articles and creates concise summaries. This lets customers grasp key information quickly without reading through lengthy articles, thus reducing the customer effort score.
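SearchUnify’s actual prompts are not described here. As a purely hypothetical sketch, a summarization prompt that states the objective and bounds the summary length might be built like this in Python. The function name, wording, and `max_bullets` parameter are assumptions.

```python
def summarization_prompt(article: str, objective: str, max_bullets: int = 3) -> str:
    """Hypothetical summarization prompt that states the reader's objective."""
    if max_bullets < 1:
        raise ValueError("max_bullets must be at least 1")
    return (
        "You are summarizing a support article for a customer.\n"
        f"Objective: {objective}\n"
        f"Summarize the article below in at most {max_bullets} bullet points, "
        "keeping only information relevant to the objective.\n\n"
        f"Article:\n{article}"
    )


if __name__ == "__main__":
    print(summarization_prompt(
        article="(full text of a help-center article goes here)",
        objective="Help the customer reset their password",
        max_bullets=2,
    ))
```

Stating the objective tells the model which details matter, and the bullet limit keeps the summary short enough to scan.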

Generative Question Answering

Generative Question Answering, as the name suggests, generates abstractive or direct answers to customer queries. While LLMs excel at delivering direct answers, they may struggle with niche or highly specific questions. To overcome this, SearchUnify employs two effective methods to enhance understanding and accuracy:

- Fine-tuning the LLM on domain-specific textual data to improve its performance.

- Utilizing retrieval-augmented generation (RAG) to retrieve relevant information and feed it into the LLM’s prompt as an additional source of context.
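The retrieval-augmented approach can be sketched in a few lines of Python: retrieve the passages most relevant to the question, then place them in the prompt as context. SearchUnify’s actual retrieval pipeline is not described here. This sketch deliberately swaps in a naive keyword-overlap retriever over an in-memory list, whereas production systems typically use search indexes or vector embeddings. All names and sample documents are hypothetical.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Toy retriever: rank documents by how many words they share with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(doc)), i, doc) for i, doc in enumerate(documents)]
    # Highest overlap first; ties keep the original document order.
    scored.sort(key=lambda t: (-t[0], t[1]))
    return [doc for score, _, doc in scored[:k] if score > 0]


def rag_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Build a prompt that grounds the answer in the retrieved passages."""
    passages = retrieve(query, documents, k)
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using only the passages below. "
        "If they do not contain the answer, say you don't know.\n\n"
        f"Passages:\n{context or '(no relevant passages found)'}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    docs = [
        "To reset your password, open Settings and choose Reset password.",
        "Invoices are emailed on the first day of each month.",
        "The mobile app supports dark mode.",
    ]
    print(rag_prompt("How do I reset my password?", docs))
```

The instruction to answer only from the passages is what makes the retrieved text act as a grounding source rather than optional background.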

Analysis of the Next Best Action

Prompt engineering can be highly effective for analyzing customer support tickets and determining the most appropriate next steps. By creating prompts that capture relevant ticket details and predefined rules, SearchUnify enhances LLMs’ ability to categorize tickets, prioritize issues, and trigger appropriate actions.
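As a hypothetical sketch (not SearchUnify’s implementation), a triage prompt can embed the ticket, the allowed categories and priorities, and a few predefined rules. It then asks for JSON, so the model’s answer can be validated before any action is triggered. The categories, rules, and field names below are invented for illustration. In real use, the response string would come from your LLM call.

```python
import json
from typing import Dict

# Hypothetical taxonomy and rules, for illustration only.
CATEGORIES = ["billing", "technical", "account"]
PRIORITIES = ["low", "medium", "high"]
RULES = [
    "If the customer cannot log in, use category 'account'.",
    "If the ticket mentions an outage or data loss, use priority 'high'.",
]


def triage_prompt(ticket: str) -> str:
    """Build a prompt combining the ticket, allowed labels, and predefined rules."""
    rules = "\n".join(f"- {rule}" for rule in RULES)
    return (
        "Classify the support ticket below and suggest the next best action.\n"
        f"Allowed categories: {', '.join(CATEGORIES)}\n"
        f"Allowed priorities: {', '.join(PRIORITIES)}\n"
        f"Rules:\n{rules}\n"
        'Respond with JSON only, for example: '
        '{"category": "...", "priority": "...", "next_action": "..."}\n\n'
        f"Ticket:\n{ticket}"
    )


def parse_triage(response: str) -> Dict[str, str]:
    """Parse and validate the model's JSON reply; raises ValueError if invalid."""
    data = json.loads(response)  # json.JSONDecodeError is a subclass of ValueError
    if data.get("category") not in CATEGORIES:
        raise ValueError(f"unexpected category: {data.get('category')!r}")
    if data.get("priority") not in PRIORITIES:
        raise ValueError(f"unexpected priority: {data.get('priority')!r}")
    action = data.get("next_action")
    if not isinstance(action, str) or not action.strip():
        raise ValueError("missing next_action")
    return data


if __name__ == "__main__":
    print(triage_prompt("I was charged twice for my subscription this month."))
    # A made-up model reply, used here only to exercise the validator.
    reply = '{"category": "billing", "priority": "medium", "next_action": "Refund the duplicate charge"}'
    print(parse_triage(reply))
```

Validating against the same lists used in the prompt keeps unexpected labels from silently triggering the wrong workflow.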

SearchUnify empowers enterprises to streamline their customer support operations, minimize response times, and take the overall customer experience to the next level.

Prompting Success with SearchUnify!

Like a map guiding a traveler through unfamiliar terrain, prompt engineering provides LLMs with the necessary context to generate optimal responses.

Now that you know how to craft a well-designed prompt, you can make the most of LLM capabilities to produce insightful content, drive meaningful interactions, and revolutionize customer support!

If you’re excited to witness the impact of prompt engineering firsthand and explore how it can transform your business, request a live demo today!