How would you describe a search experience that just matches keywords? Despicable, right?

Now, imagine asking your friend, “What’s the world’s most expensive car?” and then following up with “What’s its cost?” Your friend immediately understands that “its” refers to the most expensive car in the world, the 1963 Ferrari 250 GTO, and accordingly replies, “$70M approx.” That was smooth, wasn’t it?

As real-life interactions become scarce, people want to interact with technology in the most natural way. But human language is ambiguous, which makes the intent behind a search query hard to pin down. However, you can stack the odds in your favor with semantic search.

What is Semantic Search?

The dictionary defines semantics as “the branch of linguistics and logic concerned with meaning.” Semantic search applies that idea to queries: it tries to work out what an ambiguous word or phrase means from the context around it. In plain terms, semantic search is designed to understand online searchers’ intent so they can find the right information at the right time.

Relevance and speed are what users care about most when they search, and a semantic model has to deliver both.

To crack both relevance and speed, a lot goes on behind the scenes. Leveraging ML and NLP, semantic search models take into account users’ location, search history, word variations, synonyms, acronyms, foreign languages, etc., to filter out irrelevant results at an early stage. The next section elaborates on how the model functions to deliver the most relevant and contextual information in a jiffy.

Leveraging Semantics to Deliver Relevant Information at Full Tilt

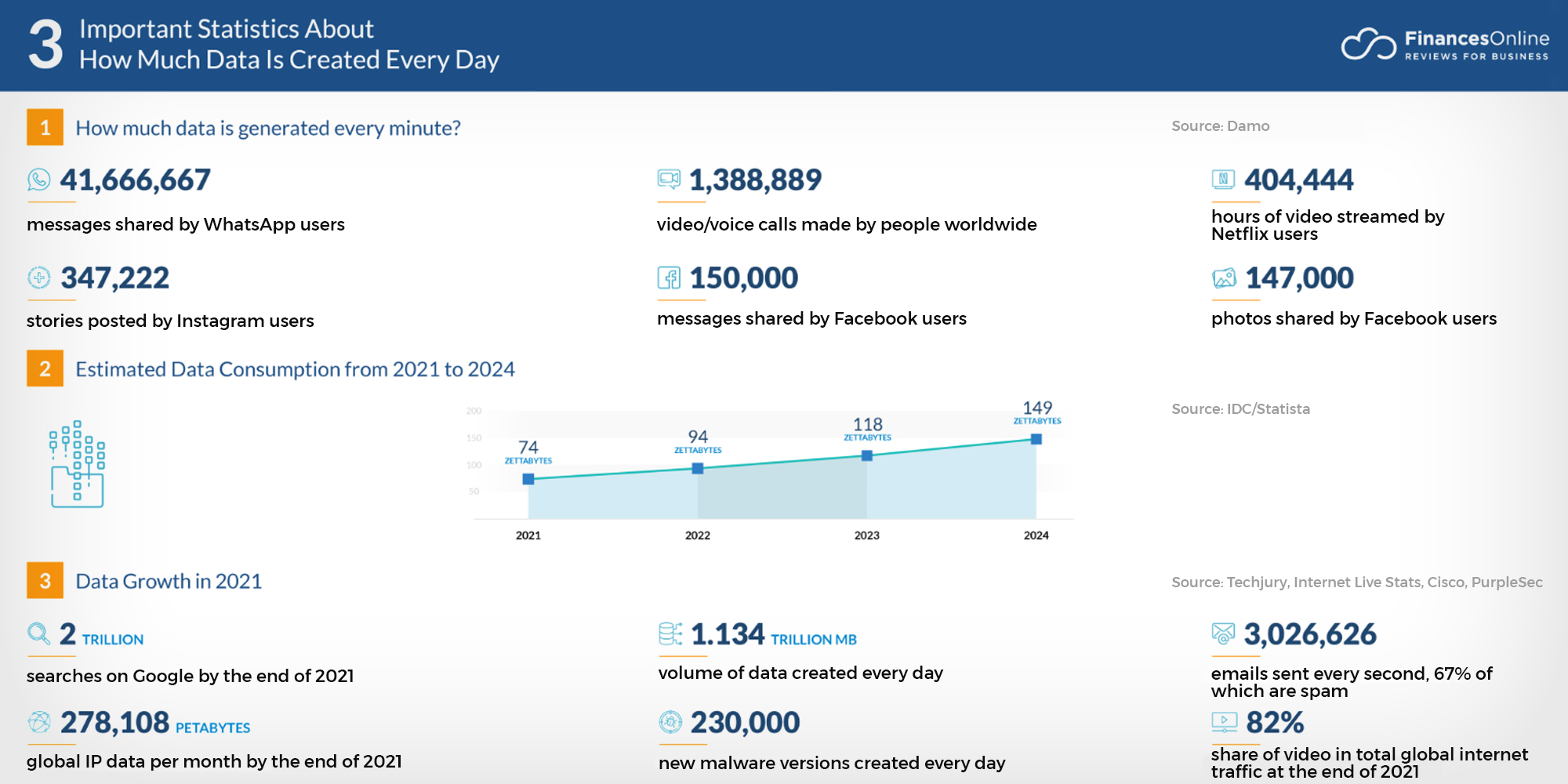

Did you know that the world will produce slightly over 180 zettabytes of data by 2025?

Now, to search such a gigantic volume of data efficiently, representing it as vectors is crucial. It can be done in the following three major ways: one-hot encoding, term frequency-inverse document frequency (TF-IDF), and word embeddings.

1. Word Embeddings

One-hot encoding represents categorical variables, such as the words in a vocabulary, as binary vectors with a single 1 marking the item. TF-IDF is a traditional vectorizer that measures how distinctive a word is for a document by weighing the number of times it appears in that document against the number of documents in the corpus that contain it.
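The two representations above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production vectorizer: the three-document corpus is invented, and the TF-IDF formula used here (term frequency times the unsmoothed logarithm of inverse document frequency) is only one of several common variants.

```python
import math
from collections import Counter

# Tiny made-up corpus, for illustration only.
docs = [
    "cheap red car",
    "expensive red car",
    "expensive vintage ferrari",
]
tokenized = [d.split() for d in docs]
vocab = sorted({token for doc in tokenized for token in doc})

def one_hot(word):
    """Binary vector with a single 1 at the word's position in the vocabulary."""
    return [1 if word == v else 0 for v in vocab]

def tf_idf(doc_tokens):
    """TF-IDF weight per term, using tf * log(N / df) with no smoothing (an assumed variant)."""
    counts = Counter(doc_tokens)
    n_docs = len(tokenized)
    weights = {}
    for term, count in counts.items():
        tf = count / len(doc_tokens)                 # how often the term occurs in this document
        df = sum(1 for d in tokenized if term in d)  # how many documents contain the term
        weights[term] = tf * math.log(n_docs / df)
    return weights

weights = tf_idf(tokenized[2])
```

In the third document, "ferrari" and "vintage" each appear in one document only, so they receive a higher weight than "expensive", which appears in two.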

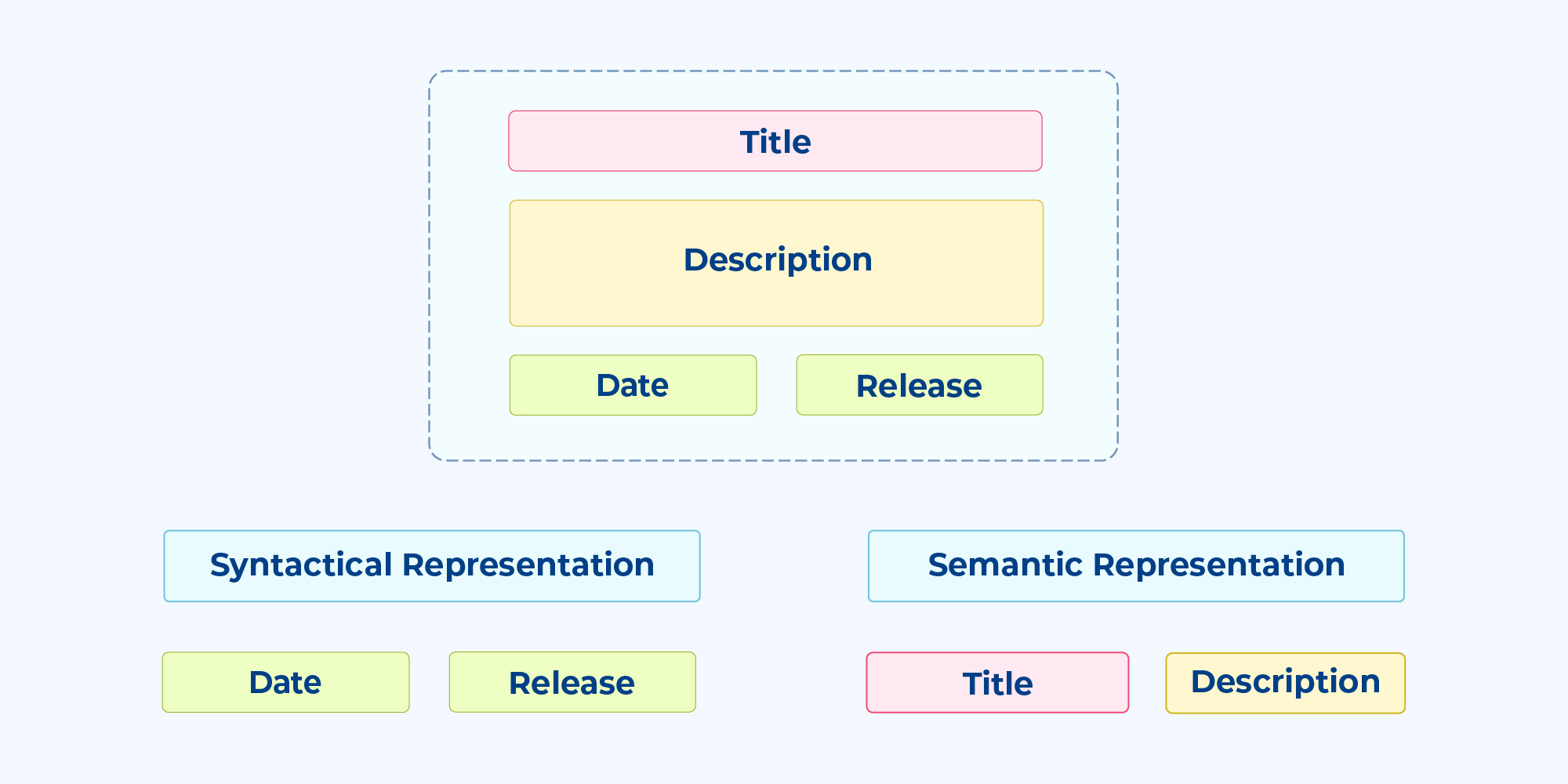

Out of the three, word embedding is the best way to vectorize data, as it takes into account both the syntax and the semantics of the corpus to provide a concise representation of your free text. Semantic engines map each word to a word embedding. How? They represent each word as a real-valued vector, often with tens or hundreds of dimensions, in a predefined vector space. An individual vector, or any single number in it, doesn’t mean anything in itself, but its pattern and its distance relative to other vectors do.
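To make the idea of relative distance concrete, here is a small sketch that compares word vectors with cosine similarity. The three-dimensional vectors are hand-made for illustration; real embeddings come from a trained model and have far more dimensions.

```python
import math

# Hand-made 3-dimensional vectors, invented for illustration only.
embeddings = {
    "car":     [0.9, 0.1, 0.0],
    "vehicle": [0.8, 0.2, 0.1],
    "banana":  [0.0, 0.1, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

related = cosine_similarity(embeddings["car"], embeddings["vehicle"])
unrelated = cosine_similarity(embeddings["car"], embeddings["banana"])
```

With these toy values, "car" sits much closer to "vehicle" than to "banana", which is the kind of pattern a semantic engine relies on.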

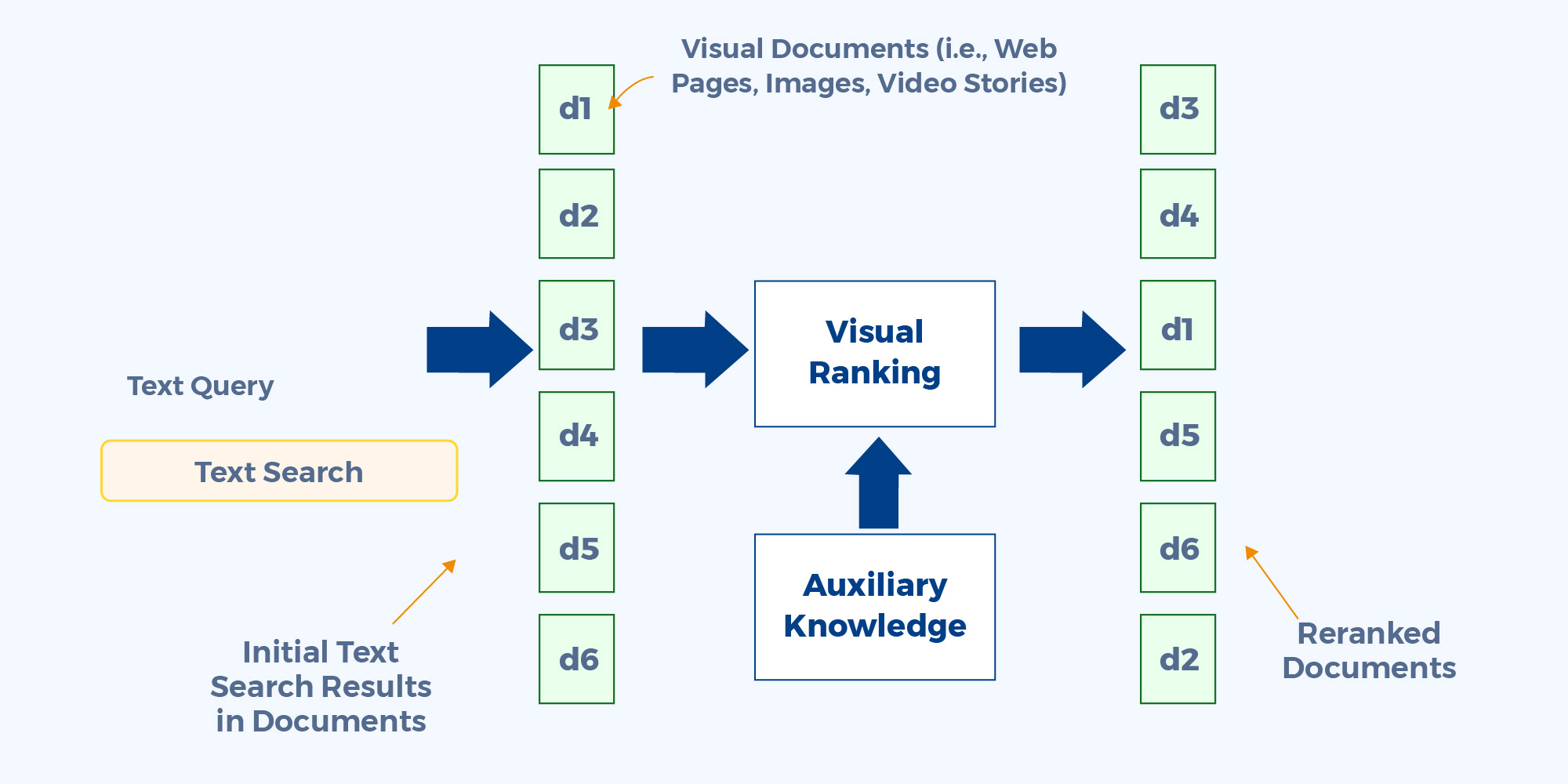

2. Re-Ranking

Deploying semantic search on the entire knowledge base is expensive: comparing a query against every document has O(N) complexity, on top of the cost of understanding and creating the semantic embeddings for the whole corpus. That is where an amalgamation of token-based candidate generation and re-ranking can work wonders.

You can use a token-based or hashing approach to generate candidates. Then, preselect a specific number of top candidates using cosine distance. Once the top candidates are shortlisted, apply semantics to re-rank them, and move the complete search to an Approximate Nearest Neighbor (ANN) algorithm backed by an index such as Annoy, HNSW, or LSH.
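The two-stage flow described above might look like the following sketch. Several things here are assumptions made to keep it short: the word vectors and documents are invented, a sentence is embedded by averaging its word vectors, the shortlist is ranked by raw token overlap rather than cosine distance, and the brute-force loop stands in for an ANN index.

```python
import math

# Toy 2-d word vectors, invented for illustration; a real system would use a trained model.
WORD_VECTORS = {
    "car": [1.0, 0.0],
    "cost": [0.0, 1.0],
    "price": [0.1, 0.9],
    "wash": [0.9, -0.3],
    "recipe": [-0.5, -0.5],
}

DOCS = [
    "car wash recipe",
    "car price list",
    "banana bread recipe",
    "cost of car repair",
]

def embed(text):
    """Average the vectors of known words; unknown words are skipped."""
    vectors = [WORD_VECTORS[t] for t in text.split() if t in WORD_VECTORS]
    if not vectors:
        return [0.0, 0.0]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

def cosine(a, b):
    """Cosine similarity, defined as 0.0 when either vector is all zeros."""
    norm = math.hypot(*a) * math.hypot(*b)
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0

def search(query, k=3):
    """Return document indices: token-overlap shortlist first, semantic re-rank second."""
    query_tokens = set(query.split())
    # Stage 1: cheap candidate generation by counting shared tokens.
    overlaps = [(len(query_tokens & set(doc.split())), i) for i, doc in enumerate(DOCS)]
    candidates = [i for n, i in sorted(overlaps, key=lambda p: -p[0]) if n > 0][:k]
    # Stage 2: the costlier semantic comparison runs on the shortlist only.
    q = embed(query)
    return sorted(candidates, key=lambda i: cosine(q, embed(DOCS[i])), reverse=True)

ranking = search("car cost")
```

For the query "car cost", the banana bread document never reaches the second stage, and "car price list" is re-ranked above "car wash recipe" even though both share exactly one token with the query.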

3. NER

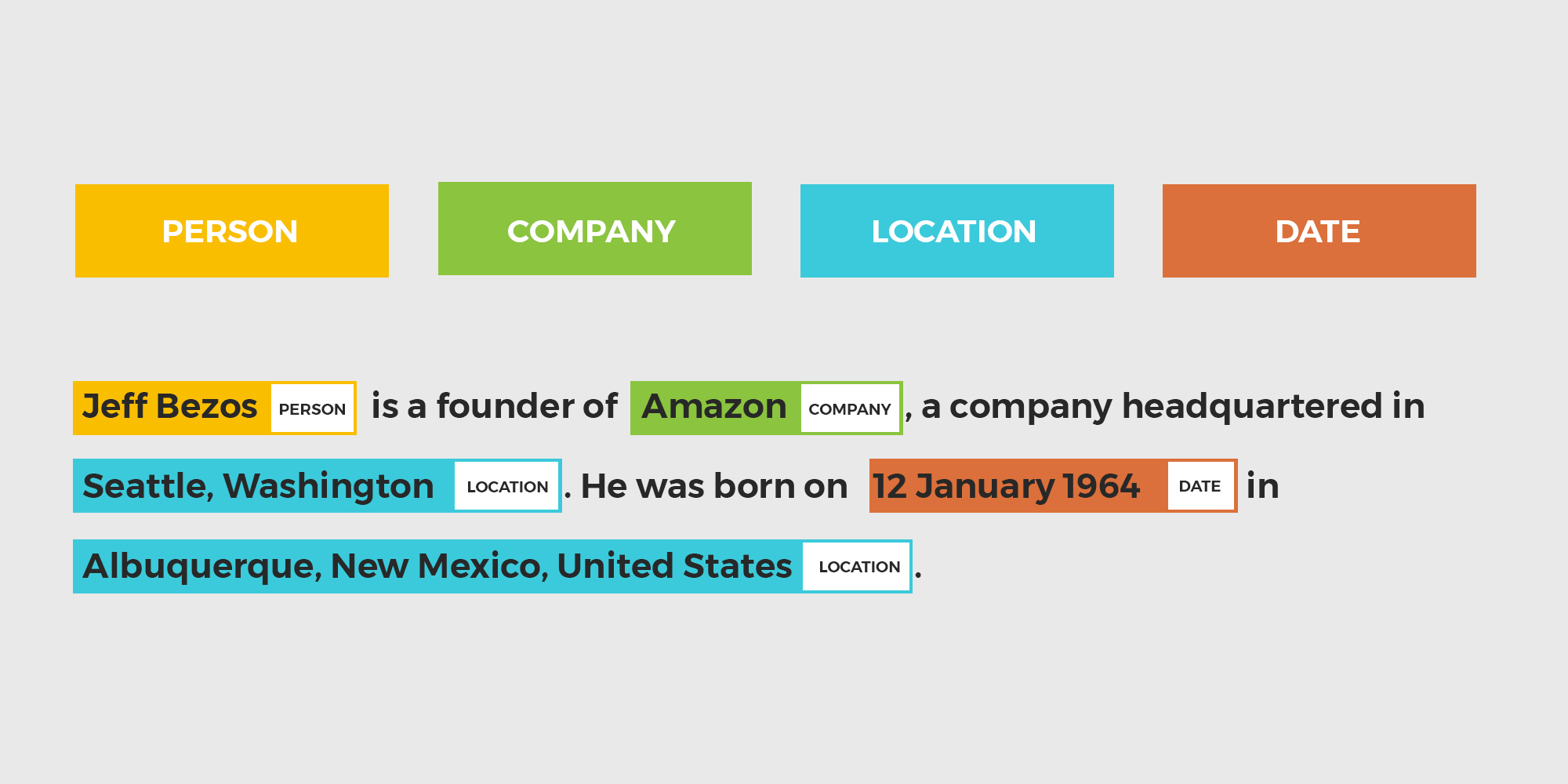

An acronym for Named Entity Recognition, NER recognizes words or phrases within a search query that mention entities. For example, in the sentence “Jeff Bezos is a founder of Amazon, a company headquartered in Seattle, Washington. He was born on 12 January 1964 in Albuquerque, New Mexico, United States” we can identify four types of entities: person (Jeff Bezos), organization (Amazon), location (Seattle, Washington; Albuquerque, New Mexico, United States), and date (12 January 1964).

Once an NER model is trained, it identifies the most prominent entities or keywords in a search query. They are then matched with databases that were tagged using annotation. Finally, documents containing these tagged entities score higher, which pushes irrelevant search results down.
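The matching-and-scoring step can be sketched as follows. This is a rough illustration under stated assumptions: a small lookup table (`GAZETTEER`) stands in for a trained NER model, and the documents, their entity tags, the base scores, and the `boost` value are all hypothetical.

```python
# Hypothetical lookup table standing in for a trained NER model.
GAZETTEER = {
    "jeff bezos": "PERSON",
    "amazon": "ORG",
    "seattle": "LOCATION",
}

def extract_entities(text):
    """Return the known entities mentioned in the text, with their types."""
    lowered = text.lower()
    return {name: label for name, label in GAZETTEER.items() if name in lowered}

# Documents tagged with entities ahead of time; base_score is an assumed keyword score.
documents = [
    {"title": "Amazon company history", "entities": {"amazon", "jeff bezos"}, "base_score": 0.5},
    {"title": "Amazon rainforest wildlife", "entities": set(), "base_score": 0.6},
    {"title": "Seattle travel guide", "entities": {"seattle"}, "base_score": 0.4},
]

def rank(query, boost=0.3):
    """Add a fixed boost for every query entity that a document is tagged with."""
    query_entities = set(extract_entities(query))
    scored = []
    for doc in documents:
        matches = len(query_entities & doc["entities"])
        scored.append((doc["base_score"] + boost * matches, doc["title"]))
    scored.sort(reverse=True)
    return [title for _, title in scored]

results = rank("who founded amazon")
```

On keyword score alone, the rainforest page would come first for "who founded amazon". Because only the company page is tagged with the organization entity, the boost lifts it to the top.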

Want to Understand How SearchUnify Leverages Semantics to Deliver Results at Full Throttle?

Relevance plays a critical role in delivering next-gen self-service and customer support experiences. We take pride in calling ourselves the relevance maestro. No, we aren’t blowing our own trumpets. Here’s a video series on relevance that will help you better understand how we, at SearchUnify, leverage re-ranking, NER, and word embeddings to step up relevance across your domain. Happy listening!